Vectara, a leader in the retrieval-augmented generation (RAG) sector, has made developing generative AI applications more accessible with its new product, Vectara Portal. Portal is an open-source platform that enables users of all technical backgrounds to create AI applications that interact with their own data.

Unlike other commercial solutions that provide quick answers from documents, Vectara Portal stands out for its user-friendly approach. With minimal setup, users can create AI-driven applications for search, summarization, or chat based on their datasets, all without writing any code.

Key Features and Use Cases

Vectara Portal is designed to empower non-developers to apply AI solutions across various business functions, such as searching through policy documents or processing invoices. However, its effectiveness is still being assessed, as it is currently in a limited beta testing phase with a small group of customers.

Ofer Mendelevitch, Vectara’s Head of Developer Relations, explains that the Portal is built on Vectara’s proprietary RAG-as-a-Service platform and is anticipated to gain substantial traction among non-developers. This expected adoption could lead to greater interest in Vectara’s more comprehensive enterprise-grade offerings. “We’re eager to see the innovative applications users will develop with Vectara Portal and believe the improved accuracy and relevance offered by their documents will showcase the full potential of Vectara’s enterprise RAG solutions,” Mendelevitch said.

How Vectara Portal Works

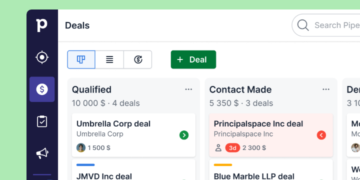

Vectara Portal is available as both a hosted application and an open-source tool under the Apache 2.0 license. To get started, users create a Portal account with their Vectara credentials and configure their profile using their Vectara ID, API key, and OAuth client ID. Once the profile is set up, users can create a new portal by specifying essential details such as the application’s name, description, and intended function: a semantic search tool, a summarization app, or a conversational chat assistant. After setup, the new portal appears on the Portal management page.
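The setup steps above can be pictured as two small records: a profile holding the three credentials, and a portal holding a name, a description, and one of three types. The Python sketch below is only an illustration of that shape. The class, field, and function names (`Profile`, `PortalConfig`, `create_portal`) are hypothetical and are not taken from Vectara's code or API.

```python
from dataclasses import dataclass
from enum import Enum


class PortalType(Enum):
    """The three application kinds the article mentions."""
    SEARCH = "search"
    SUMMARY = "summary"
    CHAT = "chat"


@dataclass(frozen=True)
class Profile:
    """Credentials a Portal profile needs (field names are hypothetical)."""
    vectara_id: str
    api_key: str
    oauth_client_id: str

    def __post_init__(self) -> None:
        # Reject blank credentials early instead of failing on first use.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"{name} must not be empty")


@dataclass
class PortalConfig:
    """Details supplied when creating a portal (hypothetical shape)."""
    name: str
    description: str
    portal_type: PortalType
    is_public: bool = True  # the article says portals are public by default


def create_portal(profile: Profile, name: str, description: str,
                  portal_type: str) -> PortalConfig:
    # `profile` is accepted only to show that a portal belongs to a
    # configured account; this sketch makes no network calls.
    if not name.strip():
        raise ValueError("portal name must not be empty")
    # PortalType(...) raises ValueError for anything other than
    # "search", "summary", or "chat".
    return PortalConfig(name.strip(), description.strip(), PortalType(portal_type))


profile = Profile("123456", "example-api-key", "example-oauth-client-id")
portal = create_portal(profile, "HR policies", "Search our policy documents", "search")
print(portal)
```

Validating the type through an enum keeps the three supported functions in one place, so an unsupported value fails at creation time rather than later.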

From this management page, users can open a portal's settings and upload documents, which tailor the application to their specific datasets. These documents are indexed by Vectara’s RAG-as-a-Service back end, which is designed to ground responses in the uploaded content and reduce hallucinations.

Mendelevitch added that “the platform offers a powerful retrieval engine, the innovative Boomerang embedding model, a multilingual re-ranker, reduced hallucinations, and overall higher quality responses to user queries in Portal. As a no-code solution, users can quickly build generative AI applications with just a few clicks.”

He further detailed that when users upload documents to a portal, the backend creates a “corpus” within the user’s main Vectara account, serving as a repository for all documents linked to that portal. When a query is submitted, Vectara’s RAG API searches the relevant corpus to provide the most accurate answer.

The system first identifies the most pertinent document segments needed to respond to the query, then feeds these segments into a large language model (LLM). Vectara Portal allows users to choose from various LLMs, including Vectara’s proprietary Mockingbird LLM and models from OpenAI.
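The flow described above (one corpus per portal, retrieval of the most relevant segments, then a prompt handed to an LLM) can be sketched in a few lines of Python. This is a toy under stated assumptions, not Vectara's implementation: word overlap stands in for embedding-based retrieval, documents are split into one segment per line, the LLM call itself is left out, and the names `Corpus`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re
from collections import Counter
from dataclasses import dataclass, field


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


@dataclass
class Corpus:
    """One corpus per portal: holds the segments of every uploaded document."""
    segments: list[str] = field(default_factory=list)

    def add_document(self, text: str) -> None:
        # Naive segmentation (an assumption): one segment per non-empty line.
        self.segments.extend(
            line.strip() for line in text.splitlines() if line.strip()
        )

    def retrieve(self, query: str, top_k: int = 2) -> list[str]:
        # Word-overlap score as a stand-in for embedding similarity.
        query_terms = Counter(tokenize(query))
        scored = []
        for segment in self.segments:
            overlap = sum((query_terms & Counter(tokenize(segment))).values())
            if overlap > 0:
                scored.append((overlap, segment))
        # Stable sort: ties keep their upload order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [segment for _, segment in scored[:top_k]]


def build_prompt(query: str, segments: list[str]) -> str:
    """Assemble the text that would be sent to whichever LLM is selected."""
    context = "\n".join(f"[{i}] {s}" for i, s in enumerate(segments, start=1))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


corpus = Corpus()
corpus.add_document(
    "Employees accrue 20 vacation days per year.\n"
    "Expense reports are due within 30 days.\n"
    "The office closes at 6 pm."
)
question = "How many vacation days do employees get?"
print(build_prompt(question, corpus.retrieve(question, top_k=1)))
```

Restricting the prompt to retrieved segments is what ties the answer to the uploaded documents; a production system would replace the overlap score with an embedding model and a re-ranker.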

“For customers subscribed to Vectara Scale, our premium offering, the Portal leverages the most advanced features, including high-performance LLMs,” Mendelevitch added. Applications created with the Portal are publicly accessible by default and can be shared via links, though users can restrict access to specific groups if desired.

Strategic Goals for Expanding Enterprise Adoption

With its no-code platform available as both a hosted solution and an open-source product, Vectara aims to enable more enterprise users to develop powerful generative AI applications for a wide range of use cases. The company expects this will increase user sign-ups and drive interest in its core RAG-as-a-Service platform, ultimately boosting conversion rates.

“RAG is a compelling use case for many enterprise developers, and we wanted to extend this capability to no-code builders, allowing them to fully experience the potential of Vectara’s comprehensive platform. Vectara Portal makes this possible, and we believe it will be an invaluable tool for product managers, general managers, and C-level executives to explore how Vectara can enhance their generative AI efforts,” Mendelevitch stated.

Vectara has secured over $50 million in funding and currently serves approximately 50 production customers, including Obeikan Group, Juniper Networks, Sonosim, and Qumulo.
